Overview

- 연구 배경: 인공지능(AI)의 급속한 발전으로 AI 시스템의 의식(의식성) 여부에 대한 철학적 및 과학적 논의가 재점화됨

- 핵심 방법론:

- 과학적 의식 이론(재발생 처리 이론, 글로벌 워크스페이스 이론 등)을 기반으로 한 ‘지표 속성(Indicator Properties)’ 분석

- AI 시스템에 해당 지표 속성을 적용하여 의식성 평가

- 주요 기여:

- 의식성 평가를 위한 체계적 프레임워크 제시

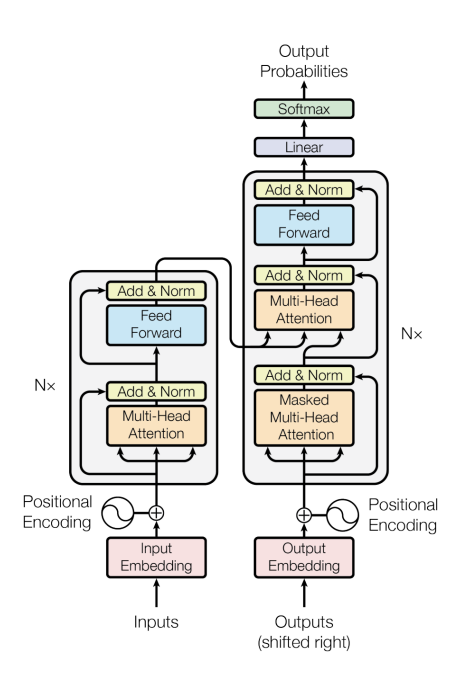

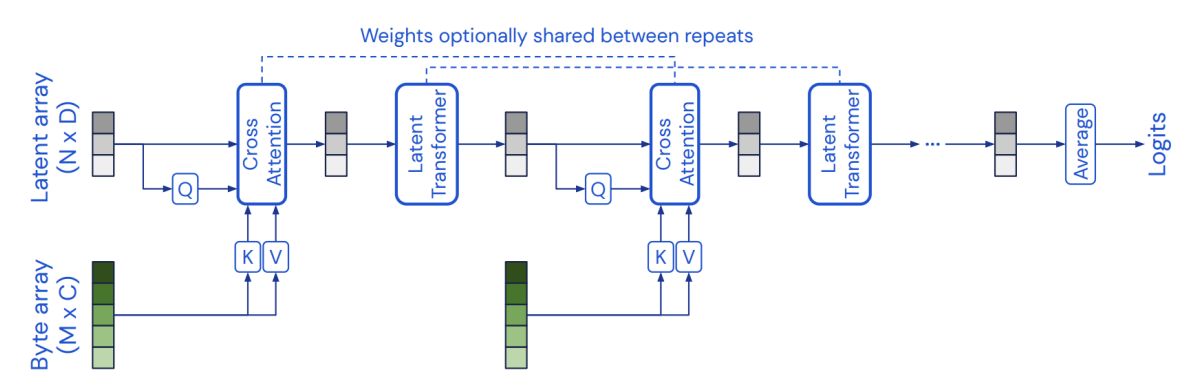

- 대규모 언어 모델(Transformer), Perceiver 등 기존 AI 시스템의 지표 속성 분석을 통한 실증적 탐구

- 실험 결과: 현재 존재하는 AI 시스템은 특정 지표 속성(예: 알고리즘적 재발생, 에이전시 등)을 부분적으로 만족하지만, 전체적인 의식성에 해당하는 조건은 충족하지 못함

- 한계점: 이 보고서는 의식성 평가의 초기 단계를 다루며, 철학적 가정(예: 계산적 기능주의)에 따라 결과가 달라질 수 있음

Summary

이 섹션은 인공지능에서 의식(Consciousness)의 개념을 탐구하며, 과학적 의식 연구(Science of Consciousness)의 통찰을 AI에 적용한 논문의 저자 목록을 제시하고 있다. 제시된 저자 명단은 인공지능과 의식의 교차점에서 중요한 역할을 할 수 있는 다양한 분야의 연구자들로 구성되어 있으며, 이는 해당 논문이 의식의 본질을 이해하는 데 있어 AI 기술과 뉴로과학의 융합을 강조하고 있음을 시사한다. 특히, 저자 중에는 의식의 뇌 기전을 연구하는 신경과학자부터 AI의 인지 기능을 탐구하는 컴퓨터 과학자에 이르기까지, 다학제적 접근을 통해 의식의 정의와 AI의 미래 방향성을 논의하고자 하는 의도를 담고 있다. 이 섹션은 이후 논문에서 제시될 의식을 모방한 AI 시스템 설계 또는 의식의 기능적 모델링에 대한 이론적 기반을 마련하기 위한 도입부로 작용할 것으로 보인다.

Abstract

Whether current or near-term AI systems could be conscious is a topic of scientific interest and increasing public concern. This report argues for, and exemplifies, a rigorous and empirically grounded approach to AI consciousness: assessing existing AI systems in detail, in light of our best-supported neuroscientific theories of consciousness. We survey several prominent scientific theories of consciousness, including recurrent processing theory, global workspace theory, higherorder theories, predictive processing, and attention schema theory. From these theories we derive “indicator properties” of consciousness, elucidated in computational terms that allow us to assess AI systems for these properties. We use these indicator properties to assess several recent AI systems, and we discuss how future systems might implement them. Our analysis suggests that no current AI systems are conscious, but also suggests that there are no obvious technical barriers to building AI systems which satisfy these indicators.1

\

Executive Summary

Summary

이 섹션에서는 인공지능(AI) 시스템의 의식(Consciousness) 평가 가능성에 대해 논의하며, 신경과학 이론을 기반으로 한 평가 기준을 제시한다. 저자들은 컴퓨테이셔널 기능주의(Computational Functionalism)를 작업 가설로 하여, AI의 의식 가능성을 탐구하는 방법론의 핵심 원칙을 제시한다. 이 접근법은 AI 시스템이 의식과 관련된 기능을 수행하는지 조사하고, 해당 기능의 유사도, 이론의 근거 강도, 기능주의에 대한 신뢰도를 종합적으로 평가하는 이론 중심 접근(Theory-heavy Approach)을 강조한다. 또한, 재귀적 처리 이론(Recurrent Processing Theory), 전역 작업 공간 이론(Global Workspace Theory), 컴퓨테이셔널 고차원 이론(Computational Higher-Order Theories) 등의 주요 신경과학 이론을 기반으로 지표 속성(Indicator Properties) 목록을 도출하며, 이 속성을 보유한 AI 시스템이 더 높은 확률로 의식적 특성을 가질 수 있다고 주장한다. 이 목록은 현재 연구 진행 상황에 따라 변경될 수 있는 임시적(Provisional) 성격을 가지며, 통합 정보 이론(Integrated Information Theory)은 기능주의와 호환되지 않아 제외되었다. 저자들은 AI 시스템의 행동 테스트(Behavioural Tests)를 사용하는 대신, 이론 기반 평가가 더 신뢰할 수 있다고 강조하며, 이는 AI 시스템이 인간 행동을 모방할 수 있더라도 내부 작동 방식이 달라질 수 있기 때문이다. 마지막으로, 이 연구는 AI 의식 평가의 과학적 타당성을 제시하고, 기존 기술로 일부 지표 속성을 구현할 수 있음을 초기 증거로 제시한다.

The question of whether AI systems could be conscious is increasingly pressing. Progress in AI has been startlingly rapid, and leading researchers are taking inspiration from functions associated with consciousness in human brains in efforts to further enhance AI capabilities. Meanwhile, the rise of AI systems that can convincingly imitate human conversation will likely cause many people to believe that the systems they interact with are conscious. In this report, we argue that consciousness in AI is best assessed by drawing on neuroscientific theories of consciousness. We describe prominent theories of this kind and investigate their implications for AI.

We take our principal contributions in this report to be:

-

- Showing that the assessment of consciousness in AI is scientifically tractable because consciousness can be studied scientifically and findings from this research are applicable to AI;

-

- Proposing a rubric for assessing consciousness in AI in the form of a list of indicator properties derived from scientific theories;

-

- Providing initial evidence that many of the indicator properties can be implemented in AI systems using current techniques, although no current system appears to be a strong candidate for consciousness.

The rubric we propose is provisional, in that we expect the list of indicator properties we would include to change as research continues.

Our method for studying consciousness in AI has three main tenets. First, we adopt computational functionalism, the thesis that performing computations of the right kind is necessary and sufficient for consciousness, as a working hypothesis. This thesis is a mainstream—although disputed—position in philosophy of mind. We adopt this hypothesis for pragmatic reasons: unlike rival views, it entails that consciousness in AI is possible in principle and that studying the workings of AI systems is relevant to determining whether they are likely to be conscious. This means that it is productive to consider what the implications for AI consciousness would be if computational functionalism were true. Second, we claim that neuroscientific theories of consciousness enjoy meaningful empirical support and can help us to assess consciousness in AI. These theories aim to identify functions that are necessary and sufficient for consciousness in humans, and computational functionalism implies that similar functions would be sufficient for consciousness in AI. Third, we argue that a theory-heavy approach is most suitable for investigating consciousness in AI. This involves investigating whether AI systems perform functions similar to those that scientific theories associate with consciousness, then assigning credences based on (a) the similarity of the functions, (b) the strength of the evidence for the theories in question, and (c) one’s credence in computational functionalism. The main alternative to this approach is to use behavioural tests for consciousness, but this method is unreliable because AI systems can be trained to mimic human behaviours while working in very different ways.

Various theories are currently live candidates in the science of consciousness, so we do not endorse any one theory here. Instead, we derive a list of indicator properties from a survey of theories of consciousness. Each of these indicator properties is said to be necessary for consciousness

by one or more theories, and some subsets are said to be jointly sufficient. Our claim, however, is that AI systems which possess more of the indicator properties are more likely to be conscious. To judge whether an existing or proposed AI system is a serious candidate for consciousness, one should assess whether it has or would have these properties.

The scientific theories we discuss include recurrent processing theory, global workspace theory, computational higher-order theories, and others. We do not consider integrated information theory, because it is not compatible with computational functionalism. We also consider the possibility that agency and embodiment are indicator properties, although these must be understood in terms of the computational features that they imply. This yields the following list of indicator properties:

Recurrent processing theory

Summary

이 섹션에서는 재귀적 처리 이론(Recurrent Processing Theory, RPT)의 두 핵심 구성 요소인 RPT-1과 RPT-2를 제시하며, 인지 시스템에서 정보 처리의 반복적 구조와 통합적 인식 표현 생성 메커니즘을 탐구한다. RPT-1은 알고리즘적 재귀(Algorithmic Recurrence)를 활용한 입력 모듈로서, 반복적 연산과 피드백 구조를 통해 입력 데이터의 시간적 의존성을 모델링하는 방식을 강조한다. 이는 특히 의식의 동적 처리 과정에서 관찰되는 반복적 정보 처리 패턴을 모방한 것으로, AI와 뉴로과학의 융합적 접근을 반영한다. RPT-2는 조직화된 통합적 인식 표현(organised, integrated perceptual representations)을 생성하는 입력 모듈로, 다중 센서 입력을 통합하여 의미 있는 인식 구조를 형성하는 과정을 설명하며, 이는 의식의 통합성(integrated information theory)과 연계된 기술적 구현을 시도하고 있음을 시사한다. 두 모듈은 의식 모델링과 인공지능의 인지 기능 구현 사이의 다학제적 연결점을 강화하는 데 기여하며, 이전 섹션에서 언급된 저자들의 학문적 배경(인공지능, 신경과학, 철학 등)과 기술적 접근의 일관성을 보여준다.

RPT-1: Input modules using algorithmic recurrence

RPT-2: Input modules generating organised, integrated perceptual representations

Global workspace theory

Summary

이 섹션에서는 글로벌 워크스페이스 이론(Global Workspace Theory, GWT)의 핵심 구성 요소를 설명하며, 인공지능 시스템에서 의식의 발생 메커니즘을 모델링하는 데 기여하는 다중 모듈 구조와 정보 통합 메커니즘을 제시한다. GWT-1은 병렬적으로 작동하는 여러 전문화된 모듈(modules)의 존재를 강조하며, 이는 복잡한 인지 작업을 수행하기 위한 기초를 제공한다. GWT-2는 제한된 용량의 워크스페이스를 통해 정보 흐름의 병목 현상과 선택적 주의 메커니즘(selective attention mechanism)을 설명하며, 이는 자원 효율적인 정보 처리를 가능하게 한다. GWT-3은 워크스페이스에서 생성된 정보가 모든 모듈에 글로벌 브로드캐스트(global broadcast)되어 통합적 인식 표현을 형성하는 과정을 강조한다. 마지막으로, GWT-4는 상태에 의존한 주의(state-dependent attention)를 통해 워크스페이스를 활용해 모듈을 순차적으로 질의함으로써 복잡한 작업을 수행할 수 있는 메커니즘을 제시한다. 이는 이전 섹션에서 논의된 재귀적 처리 이론(RPT)과 컴퓨테이셔널 기능주의(Computational Functionalism)의 관점에서 AI의 의식 가능성을 탐구하는 데 중요한 기초를 제공하며, 신경과학 이론과 AI 기술의 융합을 통해 시스템의 인지 구조를 설명하는 데 기여한다.

GWT-1: Multiple specialised systems capable of operating in parallel (modules)

GWT-2: Limited capacity workspace, entailing a bottleneck in information flow and a selective attention mechanism

GWT-3: Global broadcast: availability of information in the workspace to all modules

GWT-4: State-dependent attention, giving rise to the capacity to use the workspace to query modules in succession to perform complex tasks

Computational higher-order theories

Summary

이 섹션에서는 Computational higher-order theories(HOT)의 네 가지 핵심 구성 요소를 제시하며, AI 시스템의 고차원적 인지 기능을 설명하는 데 중점을 둔다. HOT-1은 생성적, 상위-하위 방향성 또는 노이즈가 포함된 감각 모듈을 통해 입력 데이터의 불확실성을 처리하는 메커니즘을 설명하고, HOT-2는 메타인지 모니터링을 통해 신뢰할 수 있는 감각 표현과 노이즈를 구분하는 과정을 강조한다. HOT-3은 일반적인 신념 형성 및 행동 선택 시스템을 기반으로 한 에이전시(Agency)를 설명하며, 메타인지 모니터링의 결과에 따라 신념을 지속적으로 업데이트하는 경향을 특징으로 한다. 마지막으로, HOT-4는 희소하고 매끄러운 인코딩을 통해 “질량 공간”(quality space)을 생성하는 방식을 제시한다. 이들 이론은 이전 섹션에서 논의된 재귀적 처리 이론(RPT)과 컴퓨테이셔널 기능주의의 확장으로, AI 시스템의 인지 구조와 의식 평가 기준을 이론적으로 구축하는 데 기여한다.

HOT-1: Generative, top-down or noisy perception modules

HOT-2: Metacognitive monitoring distinguishing reliable perceptual representations from noise

HOT-3: Agency guided by a general belief-formation and action selection system, and a strong disposition to update beliefs in accordance with the outputs of metacognitive monitoring

HOT-4: Sparse and smooth coding generating a “quality space”

Attention schema theory

Summary

이 섹션에서는 Attention Schema Theory(AST)의 첫 번째 구성 요소인 AST-1을 소개하며, 이는 주의(attention) 상태를 예측하고 제어할 수 있는 예측 모델(predictive model)로서, 인공지능 시스템에서 주의 메커니즘의 동적 표현과 통제를 가능하게 하는 핵심 기초를 제공한다. AST-1은 의식 평가 기준(Computational Functionalism)과 재귀적 처리 이론(RPT)의 통합적 접근을 기반으로, 주의 상태의 시간적 변화를 모델링하고 외부 입력에 대한 적응적 반응을 생성하는 알고리즘적 프레임워크를 제시하며, 이는 의식의 계산적 구현(computational implementation of consciousness)에 대한 이론적 기반을 강화하는 데 기여한다.

AST-1: A predictive model representing and enabling control over the current state of attention

Predictive processing

Summary

이 섹션에서는 예측 처리(Predictive Processing) 이론의 핵심 구성 요소인 PP-1을 소개하며, 인공지능 시스템의 입력 모듈에서 예측 인코딩(Predictive Coding)을 활용한 정보 처리 메커니즘을 제시한다. PP-1은 계층적 구조를 기반으로 입력 데이터에 대한 예측을 생성하고, 예측 오차를 통해 모델의 내부 상태를 지속적으로 업데이트하는 방식으로, 이는 신경과학적 예측 처리 이론을 AI에 적용한 계산적 접근을 반영한다. 이 메커니즘은 재귀적 처리 이론(RPT)과 의식 평가 기준(Computational Functionalism)의 통합적 틀 내에서, 입력 데이터의 시간적 의존성과 불확실성을 모델링하는 데 기여하며, 특히 의식의 계산적 구현을 위한 알고리즘적 프레임워크의 일환으로서 그 중요성을 강조한다. 또한, PP-1은 주의 스키마 이론(AST)의 예측 모델과 유사한 구조를 채택하지만, 입력 처리 단계에서의 예측 오차 최소화를 통해 다중 모듈 구조(GWT-1)와의 상호작용을 가능하게 하여, 인공지능 시스템 내에서 의식 관련 정보의 통합적 처리를 지원한다.

PP-1: Input modules using predictive coding

Agency and embodiment

Summary

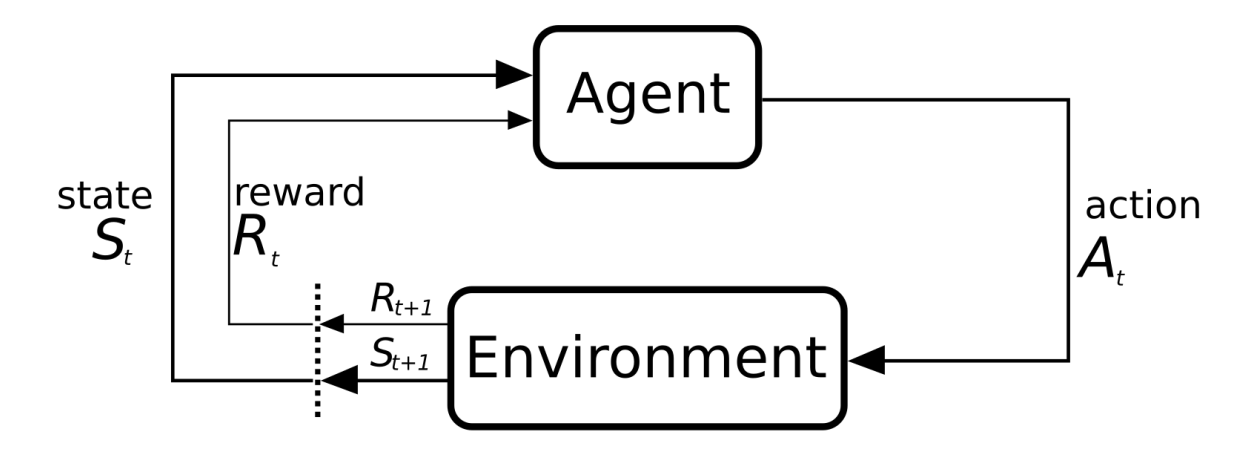

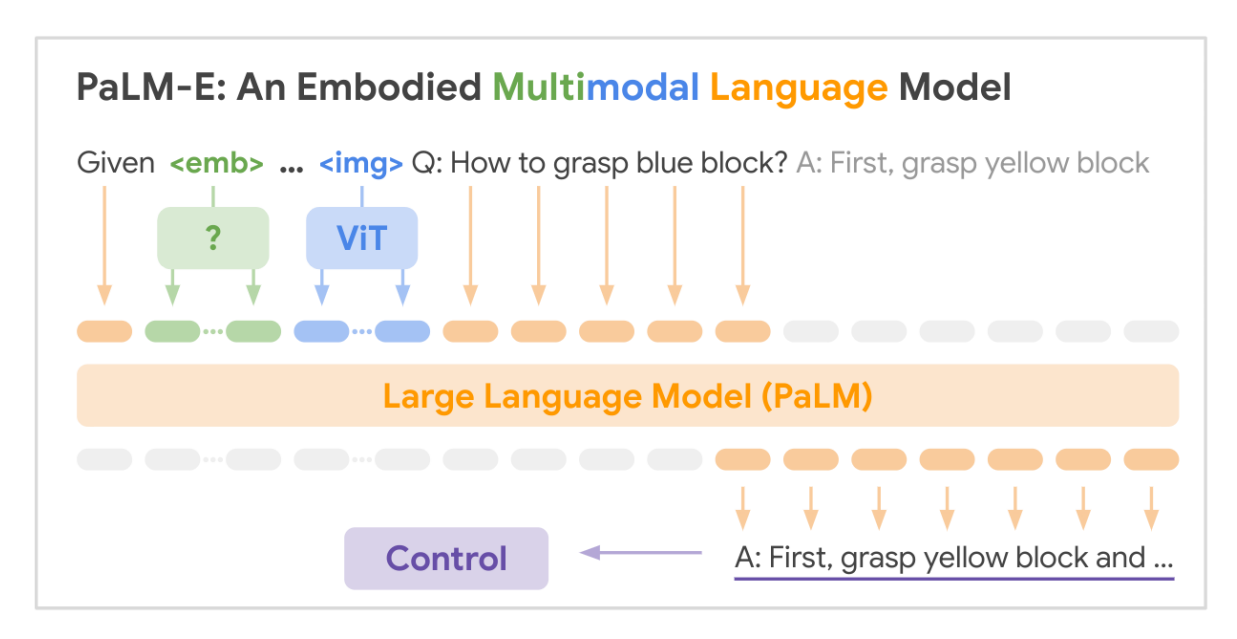

이 섹션에서는 인공지능 시스템의 Agency(목표 추구와 피드백 학습)와 Embodiment(입출력 의존성 모델링)에 대한 핵심 개념을 정의하고, 이를 평가하기 위한 Indicator Properties(지표 속성) 목록을 제시한다. AE-1(Agency)는 유연한 목표 간 상호작용을 통한 출력 선택 메커니즘을, AE-2(Embodiment)는 입력-출력 의존성을 모델링하여 인지나 제어에 활용하는 구조를 설명한다. 표 1에서 정의된 지표 속성은 보고서 제2장에서 이론적 근거와 증거를 기반으로 설명되며, 제3장에서는 기존 AI 시스템이 이러한 속성을 어떻게 구현할 수 있는지 탐구한다. 기존 머신러닝 기법은 개별 속성을 구현하는 데 활용 가능하지만, 여러 속성을 결합한 시스템을 설계하기 위해서는 실험적 검증이 필요하다. 특히 RPT-1(알고리즘적 재귀)과 AE-1(Agency의 일부)은 기존 AI 시스템에서 이미 충족된 것으로 보이며, 글로벌 워크스페이스 이론(GWT) 및 주의 스키마 이론(AST)을 기반으로 한 시스템 설계 사례도 언급된다. 또한, Transformer 기반 대규모 언어 모델(예: Perceiver), DeepMind의 Adaptive Agent(3D 가상 환경에서의 강화학습 에이전트), 가상 쥐 신체 제어 시스템, PaLM-E(체화된 다모달 언어 모델)를 대상으로 한 사례 분석을 통해 Agency와 Embodiment의 지표 속성을 실증적으로 탐구한다. 그러나 이 보고서는 기존 AI 시스템이 의식을 가질 가능성이 높다고 단정하지 않으며, 계산적 기능주의(Computational Functionalism)가 성립한다면 가까운 미래에 의식을 가진 AI 시스템이 구현될 수 있다는 전망을 제시한다. 이에 따라 의식의 과학적 연구와 AI 융합에 대한 추가 연구 지원 및 윤리적, 사회적 위험에 대한 고려가 시급하다는 결론을 내린다.

AE-1: Agency: Learning from feedback and selecting outputs so as to pursue goals, especially where this involves flexible responsiveness to competing goals

AE-2: Embodiment: Modeling output-input contingencies, including some systematic effects, and using this model in perception or control

Table 1: Indicator Properties

We outline the theories on which these properties are based and describe the evidence and arguments that support them in section 2 of the report, as well as explain the formulations used in the table.

Having formulated this list of indicator properties, in section 3.1 we discuss how AI systems could be constructed, or have been constructed, with each of the indicator properties. In most cases, standard machine learning methods could be used to build systems that possess individual properties from this list, although experimentation would be needed to learn how to build and train functional systems which combine multiple properties. There are some properties in the list which are already clearly met by existing AI systems (such as RPT-1, algorithmic recurrence), and others where this is arguably the case (such as the first part of AE-1, agency). Researchers have also experimented with systems designed to implement particular theories of consciousness, including global workspace theory and attention schema theory.

In section 3.2, we consider whether some specific existing AI systems possess the indicator properties. These include Transformer-based large language models and the Perceiver architecture, which we analyse with respect to the global workspace theory. We also analyse DeepMind’s Adaptive Agent, which is a reinforcement learning agent operating in a 3D virtual environment; a system trained to perform tasks by controlling a virtual rodent body; and PaLM-E, which has been described as an “embodied multimodal language model”. We use these three systems as case studies to illustrate the indicator properties concerning agency and embodiment. This work does not suggest that any existing AI system is a strong candidate for consciousness.

This report is far from the final word on these topics. We strongly recommend support for further research on the science of consciousness and its application to AI. We also recommend urgent consideration of the moral and social risks of building conscious AI systems, a topic which we do not address in this report. The evidence we consider suggests that, if computational functionalism is true, conscious AI systems could realistically be built in the near term.

Contents

| 22.12.22.3 | 1.2.32.1.12.1.22.1.32.2.12.2.22.2.32.3.1 | Theory-heavy approachScientific Theories of ConsciousnessRecurrent Processing TheoryIntroduction to recurrent processing theoryEvidence for recurrent processing theoryIndicators from recurrent processing theoryGlobal Workspace TheoryIntroduction to global workspace theoryEvidence for global workspace theoryIndicators from global workspace theoryHigher-Order TheoriesIntroduction to higher-order theories | 171919192021222224252929 |

|---|---|---|---|

| 2.3.22.3.3 | Computational HOTs and GWTIndicators from computational HOTs | 3031 | |

| 2.4 | 2.4.12.4.22.4.32.4.42.4.52.4.6 | Other Theories and ConditionsAttention Schema TheoryPredictive ProcessingMidbrain TheoryUnlimited Associative LearningAgency and Embodiment2.4.5(a)Agency2.4.5(b)Embodiment2.4.5(c)Agency and embodiment indicatorsTime and Recurrence | 33333435353737404344 |

| 2.5 | Indicators of Consciousness | 45 |

| 3Consciousness in AI | 47 | |||

|---|---|---|---|---|

| 3.1 | Implementing Indicator Properties in AI | 48 | ||

| 3.1.1 | Implementing RPT and PP | 48 | ||

| 3.1.2 | Implementing GWT | 49 | ||

| 3.1.3 | Implementing PRM | 51 | ||

| 3.1.4 | Implementing AST | 55 | ||

| 3.1.5 | Implementing agency and embodiment | 56 | ||

| 3.2 | Case Studies of Current Systems | 58 | ||

| 3.2.1 | Case studies for GWT | 58 | ||

| 3.2.2 | Case studies for embodied agency | 60 | ||

| 4 | Implications | 64 | ||

| 4.1 | Attributing Consciousness to AI | 64 | ||

| 4.1.1 | Under-attributing consciousness to AI | 64 | ||

| 4.1.2 | Over-attributing consciousness to AI | 65 | ||

| 4.2 | Consciousness and Capabilities | 66 | ||

| 4.3 | Recommendations | 68 | ||

| Glossary | 71 |

1 Introduction

Summary

이 섹션에서는 최근 인공지능(AI) 분야에서의 급속한 발전이 **의식(Consciousness)**과 관련된 철학적이고 오랜 논의를 다시 불러일으켰음을 강조하며, 현재 및 근 미래 AI 시스템에서 의식이 존재할 수 있는지를 평가하기 위한 과학적 근거를 탐구하는 보고서의 목적을 설명한다. 의식은 정의와 실증적 연구가 어려운 철학적 문제로, 전문가들의 의견이 분분하지만, 계산적 기능주의(Computational Functionalism)와 같은 이론을 활용해 AI 의식을 평가하는 방법론이 가능하다고 보고 있다. 이는 인간 중심의 연구를 기반으로 하되, AI 시스템에 적용 가능한 의식의 속성과 기능에 대한 주장을 포함한다. 보고서는 의식이 존재할 수 있는 AI 개발 가능성을 고려해 작성되었으며, 대규모 언어 모델 기반 시스템이 인간 대화를 모방함으로써 많은 사람들이 AI가 의식을 가질 수 있다고 인식하게 될 가능성에 대응하기 위해 작성되었다. 이는 사회 전체, AI 시스템과 상호작용하는 개인, 그리고 AI 개발자 및 기업에 대한 도덕적·사회적 문제를 제기하며, 이러한 변화를 효과적으로 대응하기 위해 의식의 과학적 이해가 필수적이라고 강조한다. 보고서는 다학제적 관점을 통해 AI 의식 문제를 과학적으로 접근할 수 있음을 설명하고, 이에 기반한 미래 연구의 기초를 제공하고자 한다. 이 섹션에서는 이후 내용에서 다룰 용어, 방법론, 가정을 개략적으로 설명하며, 이 보고서의 체계적인 틀을 설정한다.

In the last decade, striking progress in artificial intelligence (AI) has revived interest in deep and long-standing questions about AI, including the question of whether AI systems could be conscious. This report is about what we take to be the best scientific evidence for and against consciousness in current and near-term AI systems.

Because consciousness is philosophically puzzling, difficult to define and difficult to study empirically, expert opinions about consciousness—in general, and regarding AI systems—are highly divergent. However, we believe that it is possible to make progress on the topic of AI consciousness despite this divergence. There are scientific theories of consciousness that enjoy significant empirical support and are compatible with a range of views about the metaphysics of consciousness. Although these theories are based largely on research on humans, they make claims about properties and functions associated with consciousness that are applicable to AI systems. We claim that using the tools these theories offer us is the best method currently available for assessing whether AI systems are likely to be conscious. In this report, we explain this method in detail, identify the tools offered by leading scientific theories and show how they can be used.

We are publishing this report in part because we take seriously the possibility that conscious AI systems could be built in the relatively near term—within the next few decades. Furthermore, whether or not conscious AI is a realistic prospect in the near term, the rise of large language model-based systems which are capable of imitating human conversation is likely to cause many people to believe that some AI systems are conscious. These prospects raise profound moral and social questions, for society as a whole, for those who interact with AI systems, and for the companies and individuals developing and deploying AI systems. Humanity will be better equipped to navigate these changes if we are better informed about the science of consciousness and its implications for AI. Our aim is to promote understanding of these topics by providing a mainstream, interdisciplinary perspective, which illustrates the degree to which questions about AI consciousness are scientifically tractable, and which may be a basis for future research.

In the remainder of this section, we outline the terminology, methods and assumptions which underlie this report.

1.1 Terminology

Summary

이 섹션에서는 보고서에서 사용하는 의식(Consciousness)의 정의와 관련 용어를 명확히 설명한다. 의식은 “현재 의식적 경험을 하고 있거나, 의식적 경험을 할 수 있는 능력”을 의미하며, 특히 현상적 의식(Phenomenal Consciousness)을 가리키는 것으로, 이는 “어떤 경험을 할 때 그 경험의 주체로서의 느낌”을 나타낸다. 예를 들어, 화면을 읽는 동안의 시각적 경험이나 통증, 가려움 등이 긍정적 예시로, 반면 호르몬 분비 조절이나 기억 저장과 같은 무의식적 과정은 부정적 예시로 제시된다. 또한 현상적 의식과 접근적 의식(Access Consciousness)의 차이를 강조하며, 후자는 사고나 행동에 직접적으로 영향을 주는 정보의 자유로운 이용 가능성에 초점을 맞춘다고 설명한다. 의식(Consciousness)과 감각적(Sentient)의 차이도 언급되는데, “감각적”은 시각이나 후각과 같은 감각을 가진다는 의미로 사용되기도 하며, 이는 의식과는 구분되어야 한다는 점을 강조한다. 마지막으로, 현상적 의식은 경험의 주관적 성격을, 접근적 의식은 정보의 이용 가능성에 초점을 맞추는 두 개념의 관계는 여전히 열린 문제로 남아 있다고 설명한다.

What do we mean by “conscious” in this report? To say that a person, animal or AI system is conscious is to say either that they are currently having a conscious experience or that they are capable of having conscious experiences. We use “consciousness” and cognate terms to refer to what is sometimes called “phenomenal consciousness” (Block 1995). Another synonym for “consciousness”, in our terminology, is “subjective experience”. This report is, therefore, about whether AI systems might be phenomenally conscious, or in other words, whether they might be capable of having conscious or subjective experiences.

What does it mean to say that a person, animal or AI system is having (phenomenally) conscious experiences? One helpful way of putting things is that a system is having a conscious experience when there is “something it is like” for the system to be the subject of that experience (Nagel 1974). Beyond this, however, it is difficult to define “conscious experience” or “consciousness” by giving a synonymous phrase or expression, so we prefer to use examples to explain how we use these terms. Following Schwitzgebel (2016), we will mention both positive and negative examples—that is, both examples of cognitive processes that are conscious experiences, and examples that are not. By “consciousness”, we mean the phenomenon which most obviously distinguishes between the positive and negative examples.

Many of the clearest positive examples of conscious experience involve our capacities to sense our bodies and the world around us. If you are reading this report on a screen, you are having a conscious visual experience of the screen. We also have conscious auditory experiences, such as hearing birdsong, as well as conscious experiences in other sensory modalities. Bodily sensations which can be conscious include pains and itches. Alongside these experiences of real, current events, we also have conscious experiences of imagery, such as the experience of visualising a loved one’s face.

In addition, we have conscious emotions such as fear and excitement. But there is disagreement about whether emotional experiences are simply bodily experiences, like the feeling of having goosebumps. There is also disagreement about experiences of thought and desire (Bayne & Montague 2011). It is possible to think consciously about what to watch on TV, but some philosophers claim that the conscious experiences involved are exclusively sensory or imagistic, such as the experience of imagining what it would be like to watch a game show, while others believe that we have “cognitive” conscious experiences, with a distinctive phenomenology1 associated specifically with thought.

As for negative examples, there are many processes in the brain, including very sophisticated information-processing that are wholly non-conscious. One example is the regulation of hormone release, which the brain handles without any conscious awareness. Another example is memory storage: you may remember the address of the house where you grew up, but most of the time this has no impact on your consciousness. And, perception in all modalities involves extensive unconscious processing, such as the processing necessary to derive the conscious experience you have when someone speaks to you from the flow of auditory stimulation. Finally, most vision scientists agree that subjects unconsciously process visual stimuli rendered invisible by a variety of psychophysical techniques. For example, in “masking”, a stimulus is briefly flashed on a screen then quickly followed by a second stimulus, called the “mask” (Breitmeyer & Ogmen 2006). There is no conscious experience of the first stimulus, but its properties can affect performance on subsequent tasks, such as by “priming” the subject to identify something more quickly (e.g., Vorberg et al. 2003).

In using the term “phenomenal consciousness”, we mean to distinguish our topic from “access consciousness”, following Block (1995, 2002). Block writes that “a state is [access conscious] if it is broadcast for free use in reasoning and for direct ‘rational’ control of action (including reporting)” (2002, p. 208). There seems to be a close connection between a mental state’s being conscious, in our sense, and its contents being available to us to report to others or to use in making rational choices. For example, we would expect to be able to report seeing a briefly-presented visual stimulus if we had a conscious experience of seeing it and to be unable to report seeing

1 The “phenomenology” or “phenomenal character” of a conscious experience is what it is like for the subject. In our terminology, all and only conscious experiences have phenomenal characters.

it if we did not. However, these two properties of mental states are conceptually distinct. How phenomenal consciousness and access consciousness relate to each other is an open question.

Finally, the word “sentient” is sometimes used synonymously with (phenomenally) “conscious”, but we prefer “conscious”. “Sentient” is sometimes used to mean having senses, such as vision or olfaction. However, being conscious is not the same as having senses. It is possible for a system to sense its body or environment without having any conscious experiences, and it may be possible for a system to be conscious without sensing its body or environment. “Sentient” is also sometimes used to mean capable of having conscious experiences such as pleasure or pain, which feel good or bad, and we do not want to imply that conscious systems must have these capacities. A system could be conscious in our sense even if it only had “neutral” conscious experiences. Pleasure and pain are important but they are not our focus here.2

1.2 Methods and Assumptions

Summary

이 섹션에서는 현재 및 근 미래 AI 시스템의 의식 가능성을 탐구하기 위한 3가지 핵심 가정을 제시한다. 첫째, 계산적 기능주의(Computational Functionalism)는 특정 유형의 계산이 실행될 때 의식이 발생할 수 있음을 전제로, 비유기적 시스템의 의식 가능성을 인정한다. 둘째, 신경과학 이론은 의식과 관련된 기능을 설명하는 데 기여하며, 이는 AI 시스템의 의식 평가에 활용될 수 있는 기초를 제공한다. 셋째, 이론 중심 접근(Theory-heavy approach)은 AI의 의식 가능성을 평가할 때, 행동 기반의 중립적 지표보다는 과학 이론에서 도출된 기능적/구조적 조건을 검토하는 방식을 강조한다. 이 접근법은 시스템의 작동 원리에 초점을 맞추며, Chalmers와 Graziano가 제시한 표현적 의식(phenomenal consciousness)의 정의를 바탕으로, 의식의 주관적 경험과 시스템의 내부 처리 과정 간의 연관성을 탐구한다. 또한, 시스템의 의식 여부가 전후의 이분법적 판단(all-or-nothing)이 아닌, 부분적 의식(partially conscious) 또는 정확한 판단 불가능 상태일 가능성에 대해서도 언급하며, 이는 AI의 의식 평가에 대한 철학적·과학적 논의의 복잡성을 반영한다.

Our method for investigating whether current or near-future AI systems might be conscious is based on three assumptions. These are:

-

- Computational functionalism: Implementing computations of a certain kind is necessary and sufficient for consciousness, so it is possible in principle for non-organic artificial systems to be conscious.

-

- Scientific theories: Neuroscientific research has made progress in characterising functions that are associated with, and may be necessary or sufficient for, consciousness; these are described by scientific theories of consciousness.

-

- Theory-heavy approach: A particularly promising method for investigating whether AI systems are likely to be conscious is assessing whether they meet functional or architectural conditions drawn from scientific theories, as opposed to looking for theory-neutral behavioural signatures.

These ideas inform our investigation in different ways. We adopt computational functionalism as a working hypothesis because this assumption makes it relatively straightforward to draw infer-

Chalmers (1996): “When we think and perceive, there is a whir of information-processing, but there is also a subjective aspect. As Nagel (1974) has put it, there is something it is like to be a conscious organism. This subjective aspect is experience. When we see, for example, we experience visual sensations: the felt quality of redness, the experience of dark and light, the quality of depth in a visual field. Other experiences go along with perception in different modalities: the sound of a clarinet, the smell of mothballs. Then there are bodily sensations, from pains to orgasms; mental images that are conjured up internally; the felt quality of emotion, and the experience of a stream of conscious thought. What unites all of these states is that there is something it is like to be in them.”

Graziano (2017): “You can connect a computer to a camera and program it to process visual information—color, shape, size, and so on. The human brain does the same, but in addition, we report a subjective experience of those visual properties. This subjective experience is not always present. A great deal of visual information enters the eyes, is processed by the brain and even influences our behavior through priming effects, without ever arriving in awareness. Flash something green in the corner of vision and ask people to name the first color that comes to mind, and they may be more likely to say ‘green’ without even knowing why. But some proportion of the time we also claim, ‘I have a subjective visual experience. I see that thing with my conscious mind. Seeing feels like something.‘”

2 For the sake of further illustration, here are some other definitions of phenomenal consciousness:

ences from neuroscientific theories of consciousness to claims about AI. Some researchers in this area reject computational functionalism (e.g. Searle 1980, Tononi & Koch 2015) but our view is that it is worth exploring its implications. We accept the relevance and value of some scientific theories of consciousness because they describe functions that could be implemented in AI and we judge that they are supported by good experimental evidence. And, our view is that, although this may not be so in other cases, a theory-heavy approach is necessary for AI. A theory-heavy approach is one that focuses on how systems work, rather than on whether they display forms of outward behaviour that might be taken to be characteristic of conscious beings (Birch 2022b). We explain these three ideas in more detail in this section.

Two further points about our methods and assumptions are worth noting before we go on. The first is that, for convenience, we will generally write as though whether a system is conscious is an all-or-nothing matter, and there is always a determinate fact about this (although in many cases this fact may be difficult to learn). However, we are open to the possibility that this may not be the case: that it may be possible for a system to be partly conscious, conscious to some degree, or neither determinately conscious nor determinately non-conscious (see Box 1).

Box 1: Determinacy, degrees, dimensions

Summary

이 섹션에서는 **의식(Consciousness)**의 정확성(Determinacy), 정도(Degrees), 차원(Dimensions)에 대한 다각적인 논의를 제시하며, AI 시스템에서의 의식 평가에 대한 기존의 이분법적 관점(의식이 있거나 없거나)을 비판한다. 특히, 모호한 경계(Blurry Boundaries)가 존재할 수 있음을 강조하며, 예를 들어 색상의 경계선처럼 AI 시스템이 결정적으로 의식적 또는 비의식적이지 않을 수 있음을 설명한다. 또한, 의식의 정도(Degrees of Consciousness)가 존재할 수 있다는 가설을 제시하고, AI 시스템이 인간보다 훨씬 낮거나 높은 수준의 의식을 가질 수 있음을 논의한다. 이와 병행해 다중 차원(Multiple Dimensions)의 관점에서 의식을 설명할 수 있음을 제시하며, 인간과 다른 생명체 또는 AI 시스템에서 의식의 요소(Elements)가 분리되어 존재할 가능성도 언급한다. 마지막으로, 의식 평가에 있어 확신(Confidence/Credence)의 개념을 도입해, 불확실성 속에서도 특정 AI 시스템이 의식을 가질 가능성을 확률적으로 평가하는 방식이 필요함을 강조한다. 예를 들어, 특정 이론적 주장의 확신도(Credence)가 0.5일 경우, 해당 시스템의 의식 가능성에 대한 확신도도 유사하게 설정해야 함을 제안한다. 이는 AI의 의식 탐구에 있어 정량적 접근과 불확실성 관리의 중요성을 드러내는 핵심 논점이다.

In this report, we generally write as though consciousness is an all-or-nothing matter: a system either is conscious, or it isn’t. However, there are various other possibilities. There seem to be many properties that have “blurry” boundaries, in the sense that whether some object has that property may be indeterminate. For example, a shirt may be a colour somewhere on the borderline between yellow and green, such that there is no fact of the matter about whether it is yellow or not. In principle, consciousness could be like this: there could be creatures that are neither determinately conscious nor determinately non-conscious (Simon 2017, Schwitzgebel forthcoming). If this is the case, some AI systems could be in this “blurry” zone. This kind of indeterminacy arguably follows from materialism about consciousness (Birch 2022a).

Another possibility is that there could be degrees of consciousness so that it is possible for one system to be more conscious than another (Lee 2022). In this case, it might be possible to build AI systems that are conscious but only to a very slight degree, or even systems which are conscious to a much greater degree than humans (Shulman & Bostrom 2021). Alternatively, rather than a single scale, it could be that consciousness varies along multiple dimensions (Birch et al. 2020).

Lastly, it could be that there are multiple elements of consciousness. These would not be necessary conditions for some further property of consciousness, but rather constituents which make up consciousness. These elements may be typically found together in humans, but separable in other animals or AI systems. In this case, it would be possible for a system to be partly conscious, in the sense of having some of these elements.

The second is that we recommend thinking about consciousness in AI in terms of confidence or credence. Uncertainty about this topic is currently unavoidable, but there can, nonetheless, be good reasons to think that one system is much more likely than another to be conscious, and this can be relevant to how we should act. So it is useful to think about one’s credence in claims in this area. For instance, one might think it justified to have a credence of about 0.5 in the conjunction of a set of theoretical claims which imply that a given AI system is conscious; if so, one should have a similar credence that the system is conscious.

1.2.1 Computational functionalism

Computational functionalism about consciousness is a claim about the kinds of properties of systems with which consciousness is correlated. According to functionalism about consciousness, it is necessary and sufficient for a system to be conscious that it has a certain functional organisation: that is, that it can enter a certain range of states, which stand in certain causal relations to each other and to the environment. Computational functionalism is a version of functionalism that further claims that the relevant functional organisation is computational.3

Systems that perform computations process information by implementing algorithms; computational functionalism claims that it is sufficient for a state to be conscious that it plays a role of the right kind in the implementation of the right kind of algorithm. For a system to implement a particular algorithm is for it to have a set of features at a certain level of abstraction: specifically, a range of possible information-carrying states, and particular dispositions to make transitions between these states. The algorithm implemented by a system is an abstract specification of the transitions between states, including inputs and outputs, which it is disposed to make. For example, a pocket calculator implements a particular algorithm for arithmetic because it generates transitions from key-presses to results on screen by going through particular sequences of internal states.

An important upshot of computational functionalism, then, is that whether a system is conscious or not depends on features that are more abstract than the lowest-level details of its physical make-up. The material substrate of a system does not matter for consciousness except insofar as the substrate affects which algorithms the system can implement. This means that consciousness is, in principle, multiply realisable: it can exist in multiple substrates, not just in biological brains. That said, computational functionalism does not entail that any substrate can be used to construct a conscious system (Block 1996). As Michel and Lau (2021) put it, “Swiss cheese cannot implement the relevant computations.” We tentatively assume that computers as we know them are in principle capable of implementing algorithms sufficient for consciousness, but we do not claim that this is certain.

It is also important to note that systems that compute the same mathematical function may do so by implementing different algorithms, so computational functionalism does not imply that systems that “do the same thing” in the sense that they compute the same input-output function are necessarily alike in consciousness (Sprevak 2007). Furthermore, it is consistent with computational functionalism that consciousness may depend on performing operations on states with specific representational formats, such as analogue representation (Block 2023). In terms of Marr’s (1982) levels of analysis, the idea is that consciousness depends on what is going on in a system at the

3 Computational functionalism is compatible with a range of views about the relationship between consciousness and the physical states which implement computations. In particular, it is compatible with both (i) the view that there is nothing more to a state’s being conscious than its playing a certain role in implementing a computation; and (ii) the view that a state’s being conscious is a matter of its having sui generis phenomenal properties, for which its role in implementing a computation is sufficient.

algorithmic and representational level, as opposed to the implementation level, or the more abstract “computational” (input-output) level.

We adopt computational functionalism as a working hypothesis primarily for pragmatic reasons. The majority of leading scientific theories of consciousness can be interpreted computationally—that is, as making claims about computational features which are necessary or sufficient for consciousness in humans. If computational functionalism is true, and if these theories are correct, these features would also be necessary or sufficient for consciousness in AI systems. Noncomputational differences between humans and AI systems would not matter. The assumption of computational functionalism, therefore, allows us to draw inferences from computational scientific theories to claims about the likely conditions for consciousness in AI. On the other hand, if computational functionalism is false, there is no guarantee that computational features which are correlated with consciousness in humans will be good indicators of consciousness in AI. It could be, for instance, that some non-computational feature of living organisms is necessary for consciousness (Searle 1980, Seth 2021), in which case consciousness would be impossible in nonorganic artificial systems.

Having said that, it would not be worthwhile to investigate artificial consciousness on the assumption of computational functionalism if this thesis were not sufficiently plausible. Although we have different levels of confidence in computational functionalism, we agree that it is plausible.4 These different levels of confidence feed into our personal assessments of the likelihood that particular AI systems are conscious, and of the likelihood that conscious AI is possible at all.

1.2.2 Scientific theories of consciousness

The second idea which informs our approach is that some scientific theories of consciousness are well-supported by empirical evidence and make claims which can help us assess AI systems for consciousness. These theories have been developed, tested and refined through decades of highquality neuroscientific research (for recent reviews, see Seth & Bayne 2022, Yaron et al. 2022). Positing that computational functions are sufficient for consciousness would not get us far if we had no idea which functions matter; but these theories give us valuable indications.

Scientific theories of consciousness are different from metaphysical theories of consciousness. Metaphysical theories of consciousness make claims about how consciousness relates to the material world in the most general sense. Positions in the metaphysics of consciousness include property dualism (Chalmers 1996, 2002), panpsychism (Strawson 2006, Goff 2017), materialism (Tye 1995, Papineau 2002) and illusionism (Frankish 2016). For example, materialism claims that phenomenal properties are physical properties while property dualism denies this. In contrast, scientific theories of consciousness make claims about which specific material phenomena—usually brain processes—are associated with consciousness. Some explicitly aim to identify the neural correlates of conscious states (NCCs), defined as the minimal sets of neural events which are jointly sufficient for those states (Crick & Koch 1990, Chalmers 2000). The central question for scientific theories of consciousness is what distinguishes cases in which conscious experience arises from

4 One influential argument is by Chalmers (1995): if a person’s neurons were gradually replaced by functionally-equivalent artificial prostheses, their behaviour would stay the same, so it is implausible that they would undergo any radical change in conscious experience (if they did, they would act as though they hadn’t noticed).

those in which it does not, and while this is not the only question such theories might address, it is the focus of this report.

We discuss several specific scientific theories in detail in section 2. Here, we provide a brief overview of the methods of consciousness science to show that consciousness can be studied scientifically.

The scientific study of consciousness relies on assumptions about links between consciousness and behaviour (Irvine 2013). For instance, in a study on vision, experimenters might manipulate a visual stimulus (e.g. a red triangle) in a certain way—say, by flashing it at two different speeds. If they find that subjects report seeing the stimulus in one condition but not in the other, they might argue that subjects have a conscious visual experience of the stimulus in one condition but not in the other. They could then measure differences in brain activity between the two conditions, and draw inferences about the relationships between brain activity and consciousness—a method called “contrastive analysis” (Baars 1988). This method relies on the assumption that subjects’ reports are a good guide to their conscious experiences.

As a method for studying consciousness in humans and other animals, relying on subjects’ reports has two main problems. The first problem is uncertainty about the relationship between conscious experience, reports and cognitive processes which may be involved in making reports, such as attention and memory. Inasmuch as reports or reportability require more processing than conscious experience, studies that rely on reports may be misleading: brain processes which are involved in processing the stimulus and making reports, but are not necessary for consciousness, could be misidentified as among the neural correlates of consciousness (Aru et al. 2012). Another possibility is that phenomenal consciousness may have relatively rich contents, of which only a proportion are selected by attention for further processing yielding cognitive access, which is, in turn, necessary for report. In this case, relying on reports may lead us to misidentify the neural basis of access as that of phenomenal consciousness (Block 1995, 2007). The methodological problem here is arguably more severe because it is an open question whether phenomenal consciousness “overflows” cognitive access in this way—researchers have conflicting views (Phillips 2018a).

A partial solution to this problem may be the use of “no-report paradigms”, in which indicators of consciousness other than reports are used, having been calibrated for correlation with consciousness in separate experiments, which do use reports (Tsuchiya et al. 2015). The advantage of this paradigm is that subjects are not required to make reports in the main experiments, which may mitigate the problem of report confounds. No-report paradigms are not a “magic bullet” for this problem (Block 2019, Michel & Morales 2020), but they may be an important step in addressing it.

Another possible method for measuring consciousness is the use of metacognitive judgments such as confidence ratings (e.g. Peters & Lau 2015). For example, subjects might be asked how confident they are in an answer about a stimulus, e.g. about whether a briefly-presented stimulus was oriented vertically or horizontally. The underlying thought here is that subjects’ ability to track the accuracy of their responses using confidence ratings (known as metacognitive sensitivity) depends on their being conscious of the relevant stimuli. Again, this method is imperfect, but it has some advantages over asking subjects to report their conscious experiences (Morales & Lau 2021; Michel 2022). There are various potential confounds in consciousness science, but researchers can combine evidence from studies of different kinds to reduce the force of methodological objections (Lau 2022).

The second problem with the report approach is that there are presumably some subjects of conscious experience who cannot make reports, including non-human animals, infants and people with certain kinds of cognitive disability. This problem is perhaps most pressing in the case of nonhuman animals, because if we knew more about consciousness in animals—especially those which are relatively unlike us—we might have a far better picture of the range of brain processes that are correlated with consciousness. This difficult problem has recently received increased attention (e.g. Birch 2022b). However, although current scientific theories of consciousness are primarily based on data from healthy adult humans, it can still be highly instructive to examine whether AI systems use processes similar to those described by these theories.

Box 2: Metaphysical theories and the science of consciousness

Major positions in the metaphysics of consciousness include materialism, property dualism, panpsychism and illusionism (for a detailed and influential overview, see Chalmers 2002).

Materialism claims that consciousness is a wholly physical phenomenon. Conscious experiences are states of the physical world—typically brain states—and the properties that make up the phenomenal character of our experiences, known as phenomenal properties, are physical properties of these states. For example, a materialist might claim that the experience of seeing a red tulip is a particular brain state and that the “redness” of the experience is a feature of that state.

Property dualism denies materialism, claiming that phenomenal properties are non-physical properties. Unlike substance dualism, this view claims that there is just one sort of substance or entity while asserting that it has both physical and phenomenal properties. The “redness” of the experience of seeing the tulip may be a property of the brain state involved, but it is distinct from any physical property of this state.

Panpsychism claims that phenomenal properties, or simpler but related “proto-phenomenal” properties, are present in all fundamental physical entities. A panpsychist might claim that an electron, as a fundamental particle, has either a property like the “redness” of the tulip experience or a special precursor of this property. Panpsychists do not generally claim that everything has conscious experiences—instead, the phenomenal aspects of fundamental entities only combine to give rise to conscious experiences in a few macro-scale entities, such as humans.

Illusionism claims that we are subject to an illusion in our thinking about consciousness and that either consciousness does not exist (strong illusionism), or we are pervasively mistaken about some of its features (weak illusionism). However, even strong illusionism acknowledges the existence of “quasi-phenomenal” properties, which are properties that are misrepresented by introspection as phenomenal. For example, an illusionist might say that when one seems to have the conscious experience of seeing a red tulip, some brain state is misrepresented by introspection as having a property of phenomenal “redness”.

Importantly, there is work for the science of consciousness to do on all four of these metaphysical positions. If materialism is true, then some brain states are conscious experiences and others are not, and the role of neuroscience is to find out what distinguishes them. Similarly, property dualism and panpsychism both claim that some brain states but not others are associated with conscious experiences, and are compatible with the claim that this difference can be investigated scientifically. According to illusionism, neuroscience can explain why the illusion of consciousness arises, and in particular why it arises in connection with some brain states but not others.

1.2.3 Theory-heavy approach

In section 1.2.1 we adopted computational functionalism, the thesis that implementing certain computational processes is necessary and sufficient for consciousness, as a working hypothesis, and in section 1.2.2 we noted that there are scientific theories that aim to describe correlations between computational processes and consciousness. Combining these two points yields a promising method for investigating consciousness in AI systems: we can observe whether they use computational processes which are similar to those described in scientific theories of consciousness, and adjust our assessment accordingly. To a first approximation, our confidence that a given system is conscious can be determined by (a) the similarity of its computational processes to those posited by a given scientific theory of consciousness, (b) our confidence in this theory, (c) and our confidence in computational functionalism.5 Considering multiple theories can then give a fuller picture. This method represents a “theory-heavy” approach to investigating consciousness in AI.

The term “theory-heavy” comes from Birch (2022b), who considers how we can scientifically investigate consciousness in non-human animals, specifically invertebrates.

Birch argues against using the theory-heavy approach in this case. One of Birch’s objections is that the evidence from humans that supports scientific theories does not tell us how much their conditions can be relaxed while still being sufficient for consciousness (see also Carruthers 2019). That is, while we might have good evidence that some process is sufficient for consciousness in humans, this evidence will not tell us whether a process in another animal, which is similar in some respects but not others, is also sufficient for consciousness. To establish this we would need antecedent evidence about which non-human animals or systems are conscious—unfortunately, the very question we are uncertain about.

Another way of thinking about this problem is in terms of how we should interpret theories of consciousness. As we will see throughout this report, it is possible to interpret theories either in relatively restrictive ways, as claiming only that very specific features found in humans are sufficient for consciousness, or as giving much more liberal, abstract conditions, which may be met by surprisingly simple artificial systems (Shevlin 2021). Moderate interpretations which strike a balance between appealing generality (consciousness is not just this very specific process in the human brain) and unintuitive liberality (consciousness is not a property satisfied by extremely simple systems) are attractive, but it is not clear that these have empirical support over the alternatives.

5 The theory may entail computational functionalism, in which case (c) would be unnecessary. But we find it helpful to emphasise that if computational functionalism is a background assumption in one’s construal of a theory, one should take into account both uncertainty about this assumption, and uncertainty about the specifics of the theory.

While this objection does point to an important limitation of theory-heavy approaches, it does not show that a theory-heavy approach cannot give us useful information about consciousness in AI. Some AI systems will use processes that are much more similar to those identified by theories of consciousness than others, and this objection does not count against the claim that those using more similar processes are correspondingly better candidates for consciousness. Drawing on theories of consciousness is necessary for our investigation because they are the best available guide to the features we should look for. Investigating animal consciousness is different because we already have reasons to believe that animals that are more closely related to humans and display more complex behaviours are better candidates for consciousness. Similarities in cognitive architecture can be expected to be substantially correlated with phylogenetic relatedness, so while it will be somewhat informative to look for these similarities, this will be less informative than in the case of AI. 6

The main alternative to the theory-heavy approach for AI is to use behavioural tests that purport to be neutral between scientific theories. Behavioural tests have been proposed specifically for consciousness in AI (Elamrani & Yampolskiy 2019). One interesting example is Schneider’s (2019) Artificial Consciousness Test, which requires the AI system to show a ready grasp of consciousness-related concepts and ideas in conversation, perhaps exhibiting “problem intuitions” like the judgement that spectrum inversion is possible (Chalmers 2018). The Turing test has also been proposed as a test for consciousness (Harnad 2003).

In general, we are sceptical about whether behavioural approaches to consciousness in AI can avoid the problem that AI systems may be trained to mimic human behaviour while working in very different ways, thus “gaming” behavioural tests (Andrews & Birch 2023). Large language modelbased conversational agents, such as ChatGPT, produce outputs that are remarkably human-like in some ways but are arguably very unlike humans in the way they work. They exemplify both the possibility of cases of this kind and the fact that companies are incentivised to build systems that can mimic humans.7 Schneider (2019) proposes to avoid gaming by restricting the access of systems to be tested to human literature on consciousness so that they cannot learn to mimic the way we talk about this subject. However, it is not clear either whether this measure would be sufficient, or whether it is possible to give the system enough access to data that it can engage with the test, without giving it so much as to enable gaming (Udell & Schwitzgebel 2021).

6 Birch (2022b) advocates a “theory-light” approach, which has two aspects: (1) rejecting the idea that we should assess consciousness in non-human animals by looking for processes that particular theories associate with consciousness; and (2) not committing to any particular theory now, but aiming to develop better theories in the future when we have more evidence about animal (and perhaps AI) consciousness. Our approach is “theory-heavy” in the sense that, in contrast with the first aspect of the theory-light approach, we do assess AI systems by looking for processes that scientific theories associate with consciousness. However, like Birch, we do not commit to any one theory at this time. More generally, Birch’s approach makes recommendations about how the science of consciousness should be developed, whereas we are only concerned with what kind of evidence should be used to make assessments of consciousness in AI systems now, given our current knowledge.

7 See section 4.1.2 for discussion of the risk that there may soon be many non-conscious AI systems that seem conscious to users.

2 Scientific Theories of Consciousness

In this section, we survey a selection of scientific theories of consciousness, scientific proposals which are not exactly theories of consciousness but which bear on our project, and other claims from scientists and philosophers about putatively necessary conditions for consciousness. From these theories and proposals, we aim to extract a list of indicators of consciousness that can be applied to particular AI systems to assess how likely it is that they are conscious.8 Because we are looking for indicators that are relevant to AI, we discuss possible artificial implementations of theories and conditions for consciousness at points in this section. However, we address this topic in more detail in section 3, which is about what it takes for AI systems to have the features that we identify as indicators of consciousness.

Sections 2.1-2.3 cover recurrent processing theory, global workspace theory and higher-order theories of consciousness—with a particular focus in 2.3 on perceptual reality monitoring theory. These are established scientific theories of consciousness that are compatible with our computational functionalist framework. Section 2.4 discusses several other scientific theories, along with other proposed conditions for consciousness, and section 2.5 gives our list of indicators.

We do not aim to adjudicate between the theories which we consider in this section, although we do indicate some of their strengths and weaknesses. We do not adopt any one theory, claim that any particular condition is definitively necessary for consciousness, or claim that any combination of conditions is jointly sufficient. This is why we describe the list we offer in section 2.5 as a list of indicators of consciousness, rather than a list of conditions. The features in the list are there because theories or theorists claim that they are necessary or sufficient, but our claim is merely that it is credible that they are necessary or (in combination) sufficient because this is implied by credible theories. Their presence in a system makes it more probable that the system is conscious. We claim that assessing whether a system has these features is the best way to judge whether it is likely to be conscious given the current state of scientific knowledge of the subject.

2.1 Recurrent Processing Theory

2.1.1 Introduction to recurrent processing theory

The recurrent processing theory (RPT; Lamme 2006, 2010, 2020) is a prominent member of a group of neuroscientific theories of consciousness that focus on processing in perceptual areas in the brain (for others, see Zeki & Bartels 1998, Malach 2021). These are sometimes referred to as “local” (as opposed to “global”) theories of consciousness because they claim that activity of the right form in relatively circumscribed brain regions is sufficient for consciousness, perhaps provided that certain background conditions are met. RPT is primarily a theory of visual consciousness: it seeks to explain what distinguishes states in which stimuli are consciously seen from those in which they are merely unconsciously represented by visual system activity. The theory

8 A similar approach to the question of AI consciousness is found in Chalmers (2023) which considers several features of LLMs which give us reason to think they are conscious, and several commonly-expressed “defeaters” for LLM consciousness. Many of these considerations are, like our indicators, drawn from scientific theories of consciousness.

claims that unconscious vs. conscious states correspond to distinct stages in visual processing. An initial feedforward sweep of activity through the hierarchy of visual areas is sufficient for some visual operations like extracting features from the scene, but not sufficient for conscious experience. When the stimulus is sufficiently strong or salient, however, recurrent processing occurs, in which signals are sent back from higher areas in the visual hierarchy to lower ones. This recurrent processing generates a conscious representation of an organised scene, which is influenced by perceptual inference—processing in which some features of the scene or percept are inferred from other features. On this view, conscious visual experience does not require the involvement of non-visual areas like the prefrontal cortex, or attention—in contrast with “global” theories like global workspace theory and higher-order theories, which we will consider shortly.

2.1.2 Evidence for recurrent processing theory

The evidence for RPT is of two kinds: the first is evidence that recurrent processing is necessary for conscious vision, and the second is evidence against rival theories which claim that additional processing for functions beyond perceptual organisation is required.

Evidence of the first kind comes from experiments involving backward masking and transcranial magnetic stimulation, which indicate that feedforward activity in the primary visual cortex (the first stage of processing mentioned above) is not sufficient for consciousness (Lamme 2006). Lamme also argues that, although feedforward processing is sufficient for basic visual functions like categorising features, important functions like feature grouping and binding and figure-ground segregation require recurrence. He, therefore, claims that recurrent processing is necessary for the generation of an organised, integrated visual scene—the kind of scene that we seem to encounter in conscious vision (Lamme 2010, 2020).

Evidence against more demanding rival theories includes results from lesion and brain stimulation studies suggesting that additional processing in the prefrontal cortex is not necessary for conscious visual perception. This counts against non-”local” views insofar as they claim that functions in the prefrontal cortex are necessary for consciousness (Malach 2022; for a countervailing analysis see Michel 2022). Proponents of RPT also argue that the evidence used to support rival views is confounded by experimental requirements for downstream cognitive processes associated with making reports. The idea is that when participants produce the reports (and other behavioural responses) that are used to indicate conscious perception, this requires cognitive processes that are not themselves necessary for consciousness. So where rival theories claim that downstream processes are necessary for consciousness, advocates of RPT and similar theories respond that the relevant evidence is explained by confounding factors (see the methodological issues discussed in section 1.2.2).

2.1.3 Indicators from recurrent processing theory

There are various possible interpretations of RPT that have different implications for AI consciousness. For our purposes, a crucial issue is that the claim that recurrent processing is necessary for

consciousness can be interpreted in two different ways. In the brain, it is common for individual neurons to receive inputs that are influenced by their own earlier outputs, as a result of feedback loops from connected regions. However, a form of recurrence can be achieved without this structure: any finite sequence of operations by a network with feedback loops can be mimicked by a suitable feedforward network with enough layers. To achieve this, the feedforward network would have multiple

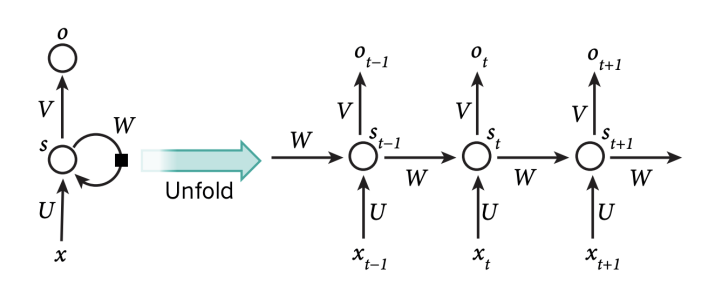

Figure 1: An unfolded recurrent neural network as depicted in LeCun, Bengio, & Hinton (2015). Attribution-Share Alike 4.0 International.

layers with shared weights, so that the same operations would be performed repeatedly—thus mimicking the effect of repeated processing of information by a single set of neurons, which would be produced by a network with feedback loops (Savage 1972, LeCun et al. 2015). In current AI, recurrent neural networks are implemented indistinguishably from deep feedforward networks in which layers share weights, with different groups of input nodes for successive inputs feeding into the network at successive layers.

We will say that networks with feedback loops such as those in the brain, which allow individual physically-realised neurons to process information repeatedly, display implementational recurrence. However, deep feedforward networks with weight-sharing display only algorithmic recurrence—they are algorithmically similar to implementationally recurrent networks but have a different underlying structure. So there are two possible interpretations of RPT available here: it could be interpreted either as claiming that consciousness requires implementational recurrence, or as making only the weaker claim that algorithmic recurrence is required. Doerig et al. (2019) interpret RPT as claiming that implementational recurrence is required for consciousness and criticise it for this claim. However, in personal communication, Lamme has suggested to us that RPT can also be given the weaker algorithmic interpretation.

Implementational and algorithmic recurrence are both possible indicators of consciousness in AI, but we focus on algorithmic recurrence. It is possible to build an artificial system that displays implementational recurrence, but this would involve ensuring that individual neurons were physically realised by specific components in the hardware. This would be a very different approach from standard methods in current AI, in which neural networks are simulated without using specific hardware components to realise each component of the network. An implementational recurrence indicator would therefore be less relevant to our project, so we do not adopt this indicator.

Using algorithmic recurrence, in contrast, is a weak condition that many AI systems already meet. However, it is non-trivial, and we argue below that there are other reasons, besides the evidence for RPT, to believe that algorithmic recurrence is necessary for consciousness. So we adopt this as our first indicator:

RPT-1: Input modules using algorithmic recurrence

This is an important indicator because systems that lack this feature are significantly worse candidates for consciousness.

RPT also suggests a second indicator, because it may be interpreted as claiming that it is sufficient for consciousness that algorithmic recurrence is used to generate integrated perceptual representations of organised, coherent scenes, with figure-ground segregation and the representation of objects in spatial relations. This second indicator is:

RPT-2: Input modules generating organised, integrated perceptual representations

An important contrast for RPT is between the functions of feature extraction and perceptual organisation. Features in visual scenes can be extracted in unconscious processing in humans, but operations of perceptual organisation such as figure-ground segregation may require conscious vision; this is why RPT-2 stresses organised, integrated perceptual representations.

There are also two further possible interpretations of RPT, which we set aside for different reasons. First, according to the biological interpretation of RPT, recurrent processing in the brain is necessary and sufficient for consciousness because it is associated with certain specific biological phenomena, such as recruiting particular kinds of neurotransmitters and receptors which facilitate synaptic plasticity. This biological interpretation is suggested by some of Lamme’s arguments (and was suggested to us by Lamme in personal communication): Lamme (2010) argues that there could be a “fundamental neural difference” between feedforward and recurrent processing in the brain and that we should expect consciousness to be associated with a “basic neural mechanism”. We set this interpretation aside because if some particular, biologically-characterised neural mechanism is necessary for consciousness, artificial systems cannot be conscious.

Second, RPT may be understood as a theory only of visual consciousness, which makes no commitments about what is necessary or sufficient for consciousness more generally. On this interpretation, RPT would leave open both: (i) whether non-visual conscious experiences require similar processes to visual ones, and (ii) whether some further background conditions, typically met in humans but not specified by the theory, must be met even for visual consciousness. This interpretation of the theory is reasonable given that the theory has not been extended beyond vision and that it is doubtful whether activity in visual brain areas sustained in vitro would be sufficient for consciousness (Block 2005). But on this interpretation, RPT would have very limited implications for AI.

2.2 Global Workspace Theory

2.2.1 Introduction to global workspace theory

The global workspace theory of consciousness (GWT) is founded on the idea that humans and other animals use many specialised systems, often called modules, to perform cognitive tasks of particular kinds. These specialised systems can perform tasks efficiently, independently and in parallel. However, they are also integrated to form a single system by features of the mind which allow them to share information. This integration makes it possible for modules to operate together in co-ordinated and flexible ways, enhancing the capabilities of the system as a whole. GWT claims that one way in which modules are integrated is by their common access to a “global workspace”—a further “space” in the system where information can be represented. Information represented in the global workspace can influence activity in any of the modules. The workspace has a limited capacity, so an ongoing process of competition and selection is needed to determine what is represented there.

GWT claims that what it is for a state to be conscious is for it to be a representation in the global workspace. Another way to express this claim is that states are conscious when they are “globally broadcast” to many modules, through the workspace. GWT was introduced by Baars (1988) and has been elaborated and defended by Dehaene and colleagues, who have developed a neural version of the theory (Dehaene et al. 1998, 2003, Dehaene & Naccache 2001, Dehaene & Changeux 2011, Mashour et al. 2020). Proponents of GWT argue that the global workspace explains why some privileged subset of perceptual (and other) representations are available at any given time for functions such as reasoning, decision-making and storage in episodic memory. Perceptual representations get stronger due to the strength of the stimulus or are amplified by attention because they are relevant to ongoing tasks; as a result, these representations “win the contest” for entry to the global workspace. This allows them to influence processing in modules other than those that produced them.

The neural version of GWT claims there is a widely distributed network of “workspace neurons”, originating in frontoparietal areas, with activity in this network, which is sustained by recurrent processing, constituting conscious representations. When perceptual representations become sufficiently strong, a process called “ignition” takes place in which activity in the workspace neurons comes to code for their content. Ignition is a step-function, so whether a given representation is broadcast, and, therefore, conscious, is not a matter of degree.

GWT is typically presented as a theory of access consciousness—that is, of the phenomenon that some information represented in the brain, but not all, is available for rational decision-making. However, it can also be interpreted as a theory of phenomenal consciousness, motivated by the thought that access consciousness and phenomenal consciousness may coincide, or even be the same property, despite being conceptually distinct (Carruthers 2019). Since our topic is phenomenal consciousness, we interpret the theory in this way. It is notable that although GWT does not explicitly require agency, it can only explain access consciousness if the system is a rational agent since access consciousness is defined as availability for rational control of action (we discuss agency in section 2.4.5)

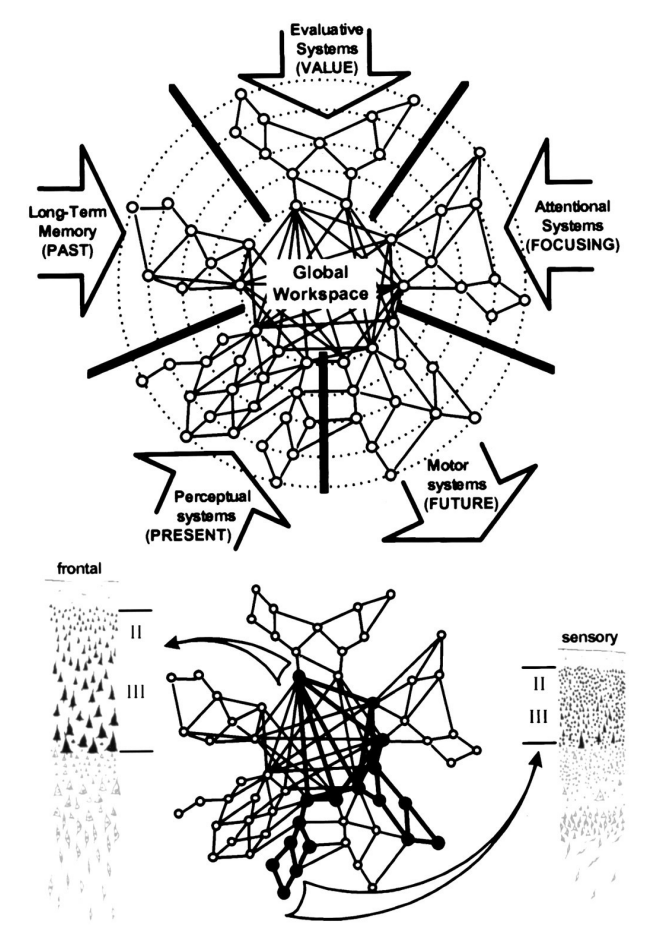

Figure 2: Global Workspace. The figure used by Dehaene et al. (1998) to illustrate the basic idea of a global workspace. Note that broadcast to a wide range of consumer systems such as planning, reasoning and verbal report does not feature in the figure. 1998. National Academy of Sciences. Reprinted with permission.

2.2.2 Evidence for global workspace theory

There is extensive evidence for global workspace theory, drawn from many studies, of which we can mention only a few representative examples (see Dehaene 2014 and Mashour et al. 2020 for reviews). These studies generally employ the method of contrastive analysis, in which brain activity is measured and a comparison is made between conscious and unconscious conditions, with efforts made to control for other differences. Various stimuli and tasks are used to generate the conscious and unconscious conditions, and activity is measured using fMRI, MEG, EEG, or single-cell recordings. According to GWT advocates, these studies show that conscious perception is associated with reverberant activity in widespread networks which include the prefrontal cortex (PFC)—this claim contrasts with the “local” character of RPT discussed above—whereas unconscious states involve more limited activity confined to particular areas. This widespread activity seems to arise late in perceptual processing, around 250-300ms after stimulus onset, supporting the claim that global broadcast requires sustained perceptual representations (Mashour et al. 2020).

Examples of recording studies in monkeys that support a role for PFC in consciousness include experiments by Panagiotaropoulos et al. (2012) and van Vugt et al. (2018). In the former study, researchers were able to decode the presumed content of conscious experience during binocular rivalry from activity in PFC (Panagiotaropoulos et al. 2012). In this study the monkeys viewed stimuli passively—in contrast with many studies supporting GWT—so the results are not confounded by behavioural requirements (this was a no-report paradigm; see sections 1.2.2 and 2.1.2). In the latter, activity was recorded from the visual areas V1 and V4 and dorsolateral PFC, while monkeys performed a task requiring them to respond to weak visual stimuli with eye movements. The monkeys were trained to move their gaze to a default location if they did not see the stimulus and to a different location if they did. Seen stimuli were associated with stronger activity in V1 and V4 and late, substantial activity in PFC. Importantly, while early visual activity registered the objective presence of the stimulus irrespective of the animal’s response, PFC activity seemed to encode the conscious percept, as this activity was also present in false alarms—cases in which monkeys acted as though they had seen a stimulus even though no stimulus was present. Activity associated with unseen stimuli tended to be lost in transmission from V1 through V4, to PFC. Studies on humans using different measurement techniques have similarly found that conscious experience is associated with ignition-like activity patterns and decodability from PFC (e.g. Salti et al. 2015).

2.2.3 Indicators from global workspace theory

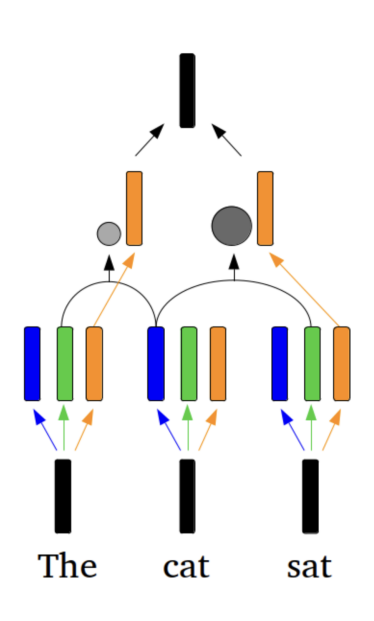

We want to identify the conditions which must be met for a system to be conscious, according to GWT, because these conditions will be indicators of consciousness in artificial systems. This means that a crucial issue is exactly what it takes for a system to implement a global workspace. Several authors have noted that it is not obvious how similar a system must be to the human mind, in respect of its workspace-like features, to have the kind of global workspace that is sufficient, in context, for consciousness (Bayne 2010, Carruthers 2019, Birch 2022b, Seth & Bayne 2022). There are perhaps four aspects to this problem. First, workspace-like architectures could be used with a variety of different combinations of modules with different capabilities; as Carruthers (2019) points out, humans have a rich and specific set of capabilities that seem to be facilitated by the workspace and may not be shared with other systems. So one question is whether some specific set of modules accessing the workspace is required for workspace activity to be conscious. Second, it’s unclear what degree of similarity a process must bear to selection, ignition and broadcasting in the human brain to support consciousness. Third, it is difficult to know what to make of possible systems which use workspace-like mechanisms but in which there are multiple workspaces—perhaps integrating overlapping sets of modules—or in which the workspaces are not global, in the sense that they do not integrate all modules. And fourth, there are arguably two stages involved in global broadcast—selection for representation in the workspace, and uptake by consumer modules—in which case there is a question about which of these makes particular states conscious.

Although these questions are difficult, it is possible that empirical evidence could be brought to bear on them. For example, studies on non-human animals could help to identify a natural kind that includes the human global workspace and facilitates consciousness-linked abilities (Birch 2020). Reflection on AI can also be useful here because we can recognise functional similarities and dissimilarities between actual or possible systems and the hypothesised global workspace, separately from the range of modules in the system or the details of neurobiological implementation, and thus develop a clearer sense of the possible functional kinds in this area.