AdaGrad?

Stochastic Gradient Descent(SGD)에서 gradient가 dimension 별로 너무 차이가 크다면, 이를 맞춰주자!

→AdaGrad(Adaptive, Gradient?)

Code

epsilon = 1e-7 grad_squared = 0 while True: dx = compute_gradient(x) grad_squared += dx * dx x -= learning_rate * dx / (np.sqrt(grad_squared)+ epsilon)

- added element-wise scaling of the gradient based on the historical sum of squared in each dimension

- “per-parameter learning rates” or “adaptive lr”

Example

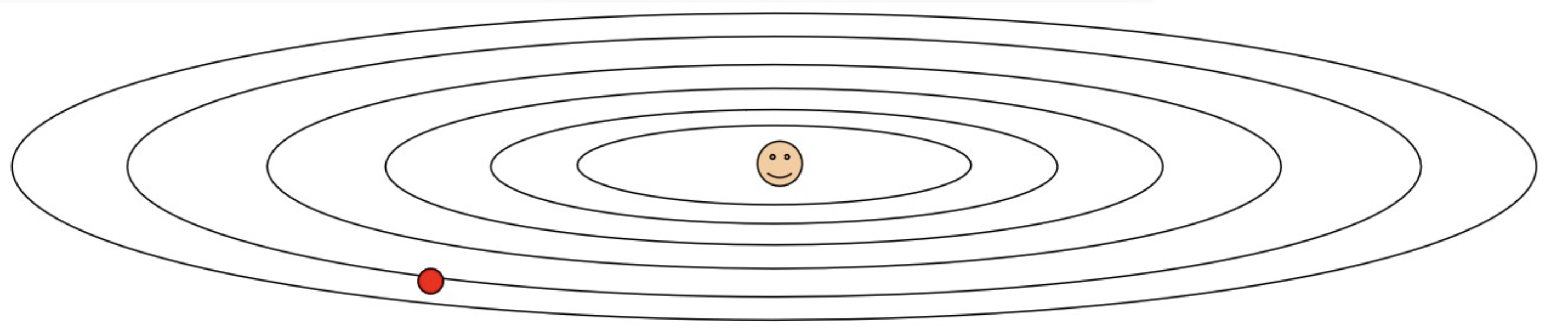

Q1: What happens with AdaGrad?

- “steep” direction은 damped.

- “flat” direction은 accelerated.

- 루트 들어간 분모항을 관찰!

Q2: What happend to the step size over long time?

- Decay to 0.

→ 이 문제를 해결한 게 RMSProp